from datasets import load_dataset

from textplumber.report import plot_confusion_matrix, get_label_names, save_results, plot_logistic_regression_features_from_pipeline

from textplumber.vader import VaderSentimentEstimator, VaderSentimentExtractor

from textplumber.preprocess import SpacyPreprocessor

from textplumber.embeddings import Model2VecEmbedder

from textplumber.core import get_stop_words

from sklearn.pipeline import Pipeline, FeatureUnion

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import classification_report

from sklearn.model_selection import train_test_splitvader

Textplumber implements functionality to score texts and extract features using VADER, “a lexicon and rule-based sentiment analysis tool”. VADER’s GitHub repository and Hutto and Gilbert’s 2014 paper about VADER are the best explanation of VADER and how the VADER lexicon and rules were derived.

Textplumber’s vader functionality for scoring text and extracting features was released in version 0.0.9. This includes:

VaderSentimentEstimator: a pipeline compoment to score or assign labels to texts with VADER

VaderSentimentExtractor: a pipeline compoment to extract VADER scores as features for machine learning models

The following experimental functionality is new in textplumber>=0.0.10 (the most recent version of Textplumber is recommended):

SentimentIntensityInterpreter: a class to implement VADER scoring with functionality to aide interpretation of the scores

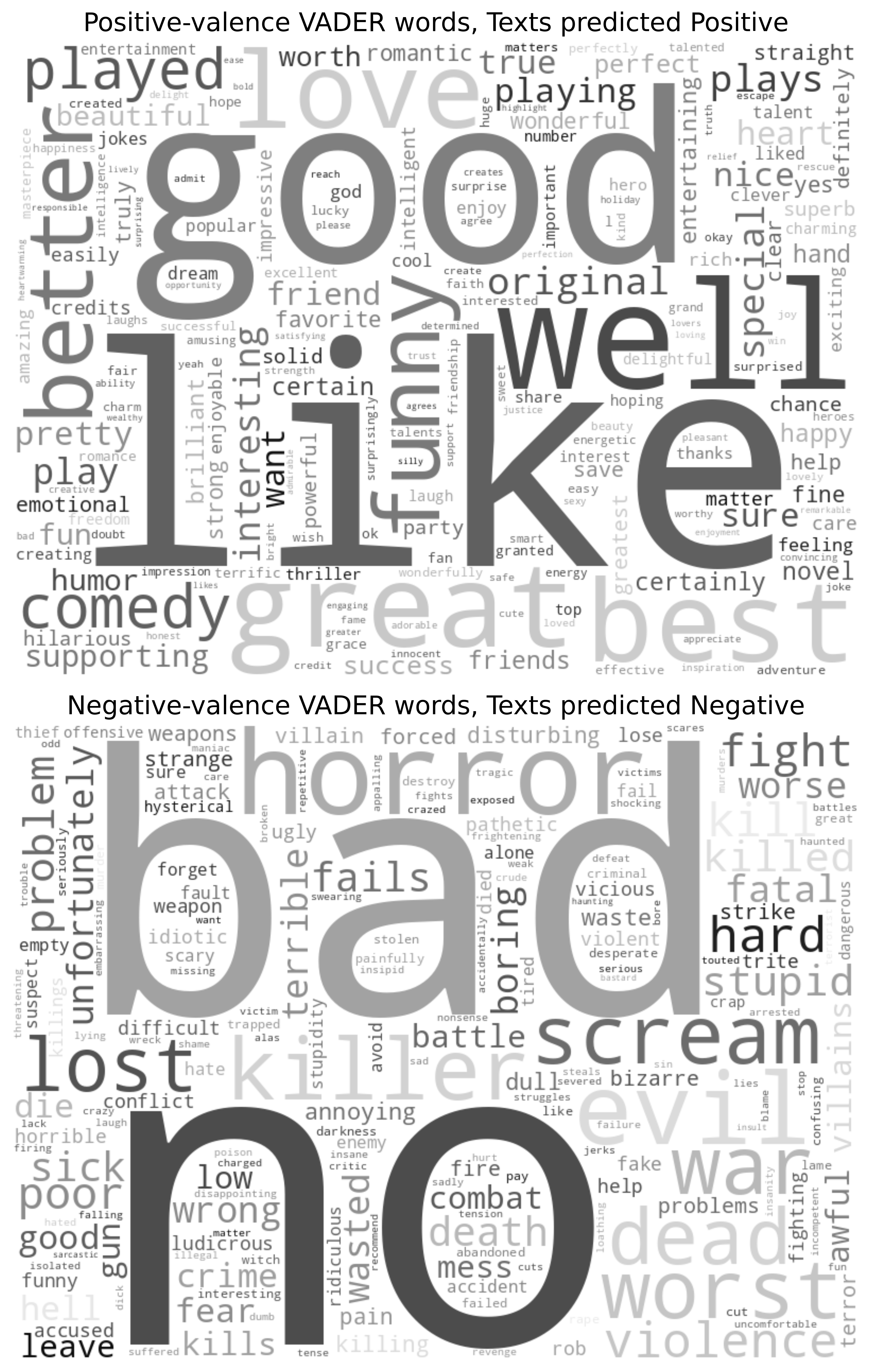

sentiment_wordcloud: a function to create a wordcloud of salient words from VADER’s lexicon across a corpus

VaderSentimentProfileExtractor: a pipeline component to extract multiple VADER sentiment scores within text documents as features for machine learning models

VaderSentimentPOSNgramsExtractor: a pipeline component to augment n-grams of parts-of-speech tags with VADER scores as features for machine learning models

Textplumber components to extract features or score texts using VADER

VaderSentimentExtractor

VaderSentimentExtractor (feature_store:textplumber.store.TextFeatureStor e=None, output:str='polarity', neutral_threshold:float=0.05)

Sci-kit Learn pipeline component to extract sentiment features using VADER.

| Type | Default | Details | |

|---|---|---|---|

| feature_store | TextFeatureStore | None | (not implemented currently) |

| output | str | polarity | ‘polarity’ (VADER’s compound score), ‘proportions’ (ratios for proportions of text that are positive, neutral or negative), or ‘allstats’ (equivalent to ‘polarity’ + ‘proportions’), ‘labels’ (positive, neutral, negative) |

| neutral_threshold | float | 0.05 | threshold for neutral sentiment |

By default neutral_threshold is set to 0.05. This means that any text with polarity greater than -0.05 and less than 0.05 will be ‘neutral’. The 0.05 value default is the recommendation of the VADER Github page, but this can be tuned as needed.

VaderSentimentExtractor.fit

VaderSentimentExtractor.fit (X, y=None)

Fit is implemented, but does nothing.

VaderSentimentExtractor.convert_score_to_label

VaderSentimentExtractor.convert_score_to_label (score:float, label_mapping=None)

Convert VADER score to label.

VaderSentimentExtractor.convert_scores_to_labels

VaderSentimentExtractor.convert_scores_to_labels (scores:list[float], label_mapping=None)

Convert VADER score to label.

VaderSentimentExtractor.transform

VaderSentimentExtractor.transform (X)

Extracts the sentiment from the text using VADER.

VaderSentimentExtractor.get_feature_names_out

VaderSentimentExtractor.get_feature_names_out (input_features=None)

Get the feature names out from the model.

VaderSentimentEstimator

VaderSentimentEstimator (output:str='labels', neutral_threshold:float=0.05, label_mapping:dict|None=None)

Sci-kit Learn pipeline component to predict sentiment using VADER.

| Type | Default | Details | |

|---|---|---|---|

| output | str | labels | ‘polarity’ (VADER’s compound score) or ‘labels’ (positive, neutral, negative) |

| neutral_threshold | float | 0.05 | threshold for neutral sentiment (see note for VaderSentimentExtractor) |

| label_mapping | dict | None | None | (ignored if labels is None) mapping of labels to desired labels - keys should be ‘positive’, ‘neutral’, ‘negative’ and values should be desired labels |

If output is set to labels then VaderSentimentEstimator functions as a pseudo-classifier. With output set to polarity, it functions as a regressor or scorer.

By default the VaderSentimentEstimator is setup to work with three classes (i.e. it returns ‘positive’, ‘neutral’ or ‘negative’). If you only have two classes (negative/positive), set the neutral_threshold to 0 to remove the neutral class. Even slightly negative or positive scores will be assigned a non-neutral label. A 0 polarity score will be assigned to positive in the two-class case. The classes might be better interpreted as negative and not-negative in this instance.

neutral_threshold = 0You may also want/need to create a label mapping so that the correct class IDs are returned by the estimator.

For example …

label_mapping = {

'positive': 1,

'negative': 0

}VaderSentimentEstimator.predict

VaderSentimentEstimator.predict (X)

Predict the sentiment of texts using VADER.

Example using VADER as an estimator

Here is an example demonstrating how to use VaderSentimentEstimator in a pipeline.

Here we load text samples from a sentiment dataset. The dataset has validation and test sets, but we are just working with the train split in this instance.

dataset_name = 'cardiffnlp/tweet_eval'

dataset_dir = 'sentiment'

dataset = load_dataset(dataset_name, data_dir = dataset_dir, split='train')Only getting 5000 for each class …

label_column = 'label'

target_names = get_label_names(dataset, label_column)

target_classes = list(range(len(target_names)))

# selecting 5000 per class here ...

df = dataset.to_pandas()

sampled_dfs = [

group.sample(n=5000, random_state=42)

for _, group in df.groupby('label')

]

df = pd.concat(sampled_dfs, ignore_index=True)

X = df['text']

y = df[label_column]X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42, stratify=y)pd.set_option('display.max_colwidth', 200)

df = pd.DataFrame({'text': X_train, 'label': y_train})

df['label_name'] = df['label'].apply(lambda x: target_names[x])

df.head(5)| text | label | label_name | |

|---|---|---|---|

| 5497 | Harper walks. #Nats now have two men on with one out. Escobar will bat next. Still 8-7 Mets in the bottom of the 9th. | 1 | neutral |

| 12677 | May or may not be getting ready to watch the very first season EVER of Big Brother. @user I'm so ready to see what Julie looks like! | 2 | positive |

| 8037 | Patrick Leahy & Christian Bale together again. U.S. Senator to make his 2nd cameo in Batman movie in Dark Knight Rises | 1 | neutral |

| 6670 | "A smartphone review that the tech press needs to read twice, obviously great for consumers too: Moto G 3rd gen review | 1 | neutral |

| 79 | Armed with the tools of power & intimidation Harper is trying to steal our country. On October 19th, armed with pencils LET'S TAKE IT BACK. | 0 | negative |

Setup a label mapping so that the label values that match the dataset are returned rather than the default ‘positive’, ‘neutral’, ‘negative’ labels returned by VaderSentimentEstimator.

label_mapping = {

'negative': 0,

'neutral': 1,

'positive': 2

}Our pipeline only has one component! Notice that the label_mapping is passed to the estimator to ensure we return comparable labels to the data-set labels.

pipeline = Pipeline([

('vader_estimator', VaderSentimentEstimator(output = 'labels', label_mapping = label_mapping)),

], verbose=True)

display(pipeline)Pipeline(steps=[('vader_estimator',

VaderSentimentEstimator(label_mapping={'negative': 0,

'neutral': 1,

'positive': 2}))],

verbose=True)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Parameters

| steps | [('vader_estimator', ...)] | |

| transform_input | None | |

| memory | None | |

| verbose | True |

Parameters

| output | 'labels' | |

| neutral_threshold | 0.05 | |

| label_mapping | {'negative': 0, 'neutral': 1, 'positive': 2} |

Note: VADER is based on a lexicon and heuristics that are independent of the training data. The fit call is not really required below (and it does nothing).

pipeline.fit(X_train, y_train)

y_pred = pipeline.predict(X_test)[Pipeline] ... (step 1 of 1) Processing vader_estimator, total= 0.0sLog some results for the final cell summary.

dataset_descriptor = 'tweet_eval sentiment dataset, 5000 rows per class randomly sampled from train split (per class - 4000 train, 1000 test)'

experiment_descriptor = 'Assigning labels using VADER'

results = save_results('results_example_vader.csv', pipeline, experiment_descriptor, dataset_descriptor, y_test, y_pred, target_classes, target_names, classifier_step_name = 'vader_estimator')Here are the results …

print(classification_report(y_test, y_pred, labels = target_classes, target_names = target_names, digits=3))

plot_confusion_matrix(y_test, y_pred, target_classes, target_names) precision recall f1-score support

negative 0.657 0.591 0.622 1000

neutral 0.533 0.392 0.452 1000

positive 0.514 0.702 0.594 1000

accuracy 0.562 3000

macro avg 0.568 0.562 0.556 3000

weighted avg 0.568 0.562 0.556 3000

Example using VADER as a feature extractor

Here is an example demonstrating how to use VaderSentimentExtractor in a pipeline. We will use the same dataset as above so we can compare results between predictions based on VADER and the predictions of a machine learning model that uses VADER statistics as features.

The VaderSentimentExtractor can return VADER’s compound polarity score (‘polarity’), proportions of positive/neutral/negative (‘proportions’), or all statistics (‘allstats’).

Note: when proportions are being returned by VADER, these do not make use of rules reflected in the compound polarity score. See VADER’s Github documentation for more information.

pipeline = Pipeline([

('vader_extractor', VaderSentimentExtractor(output = 'allstats')),

('classifier', LogisticRegression(max_iter = 5000, random_state=55))

], verbose=True)

display(pipeline)Pipeline(steps=[('vader_extractor', VaderSentimentExtractor(output='allstats')),

('classifier',

LogisticRegression(max_iter=5000, random_state=55))],

verbose=True)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Parameters

| steps | [('vader_extractor', ...), ('classifier', ...)] | |

| transform_input | None | |

| memory | None | |

| verbose | True |

Parameters

| feature_store | None | |

| output | 'allstats' | |

| neutral_threshold | 0.05 |

Parameters

| penalty | 'l2' | |

| dual | False | |

| tol | 0.0001 | |

| C | 1.0 | |

| fit_intercept | True | |

| intercept_scaling | 1 | |

| class_weight | None | |

| random_state | 55 | |

| solver | 'lbfgs' | |

| max_iter | 5000 | |

| multi_class | 'deprecated' | |

| verbose | 0 | |

| warm_start | False | |

| n_jobs | None | |

| l1_ratio | None |

pipeline.fit(X_train, y_train)

y_pred = pipeline.predict(X_test)[Pipeline] ... (step 1 of 2) Processing vader_extractor, total= 1.0s

[Pipeline] ........ (step 2 of 2) Processing classifier, total= 0.1sLogging results for summary …

experiment_descriptor = 'Logistic Regression classifier using VADER features'

results = save_results('results_example_vader.csv', pipeline, experiment_descriptor, dataset_descriptor, y_test, y_pred, target_classes, target_names, classifier_step_name = 'classifier')As might be expected, the performance of a classifier based on statistical output from VADER is similar to the results of the first experiment, which used VADER to assign the class …

print(classification_report(y_test, y_pred, labels = target_classes, target_names = target_names, digits=3))

plot_confusion_matrix(y_test, y_pred, target_classes, target_names) precision recall f1-score support

negative 0.660 0.632 0.646 1000

neutral 0.527 0.493 0.510 1000

positive 0.570 0.631 0.599 1000

accuracy 0.585 3000

macro avg 0.586 0.585 0.585 3000

weighted avg 0.586 0.585 0.585 3000

It is anticipated that the VaderSentimentExtractor component will be used alongside other features. Here is an example where the VADER statistics are supplemented by other features.

Setup a feature store to save preprocessed texts …

feature_store = TextFeatureStore('feature_store_example_vader.sqlite')Augment the VADER features with embeddings …

pipeline = Pipeline([

('preprocess', SpacyPreprocessor(feature_store=feature_store)),

('features', FeatureUnion([

('vader', VaderSentimentExtractor(output = 'allstats')),

('embeddings', Model2VecEmbedder(feature_store=feature_store)),

], verbose=True)),

('classifier', LogisticRegression(max_iter = 5000, random_state=55)),

], verbose=True)

display(pipeline)Pipeline(steps=[('preprocess',

SpacyPreprocessor(feature_store=<textplumber.store.TextFeatureStore object at 0x7737f274ab90>)),

('features',

FeatureUnion(transformer_list=[('vader',

VaderSentimentExtractor(output='allstats')),

('embeddings',

Model2VecEmbedder(feature_store=<textplumber.store.TextFeatureStore object at 0x7737f274ab90>))],

verbose=True)),

('classifier',

LogisticRegression(max_iter=5000, random_state=55))],

verbose=True)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Parameters

| steps | [('preprocess', ...), ('features', ...), ...] | |

| transform_input | None | |

| memory | None | |

| verbose | True |

Parameters

| feature_store | <textplumber....x7737f274ab90> | |

| pos_tagset | 'simple' | |

| model_name | 'en_core_web_sm' | |

| disable | ['parser', 'ner'] | |

| enable | ['sentencizer'] | |

| batch_size | 200 | |

| n_process | 1 |

Parameters

| transformer_list | [('vader', ...), ('embeddings', ...)] | |

| n_jobs | None | |

| transformer_weights | None | |

| verbose | True | |

| verbose_feature_names_out | True |

Parameters

| feature_store | None | |

| output | 'allstats' | |

| neutral_threshold | 0.05 |

Parameters

| feature_store | <textplumber....x7737f274ab90> | |

| model_name | 'minishlab/potion-base-8M' | |

| batch_size | 5000 |

Parameters

| penalty | 'l2' | |

| dual | False | |

| tol | 0.0001 | |

| C | 1.0 | |

| fit_intercept | True | |

| intercept_scaling | 1 | |

| class_weight | None | |

| random_state | 55 | |

| solver | 'lbfgs' | |

| max_iter | 5000 | |

| multi_class | 'deprecated' | |

| verbose | 0 | |

| warm_start | False | |

| n_jobs | None | |

| l1_ratio | None |

pipeline.fit(X_train, y_train)

y_pred = pipeline.predict(X_test)[Pipeline] ........ (step 1 of 3) Processing preprocess, total= 26.4s

[FeatureUnion] ......... (step 1 of 2) Processing vader, total= 0.9s

[FeatureUnion] .... (step 2 of 2) Processing embeddings, total= 1.2s

[Pipeline] .......... (step 2 of 3) Processing features, total= 2.1s

[Pipeline] ........ (step 3 of 3) Processing classifier, total= 7.6sLogging results for summary …

experiment_descriptor = 'Logistic Regression classifier using VADER features and Model2Vec embeddings'

results = save_results('results_example_vader.csv', pipeline, experiment_descriptor, dataset_descriptor, y_test, y_pred, target_classes, target_names, classifier_step_name = 'classifier')print(classification_report(y_test, y_pred, labels = target_classes, target_names = target_names, digits=3))

plot_confusion_matrix(y_test, y_pred, target_classes, target_names) precision recall f1-score support

negative 0.704 0.725 0.714 1000

neutral 0.594 0.574 0.584 1000

positive 0.663 0.666 0.665 1000

accuracy 0.655 3000

macro avg 0.654 0.655 0.654 3000

weighted avg 0.654 0.655 0.654 3000

Do the VADER statistics contribute to the accuracy? In other words, could we remove the VADER stats for similar performance? This sanity check is run in the next cell. Results are shown in the results summary.

pipeline = Pipeline([

('preprocess', SpacyPreprocessor(feature_store=feature_store)),

('features', FeatureUnion([

('embeddings', Model2VecEmbedder(feature_store=feature_store)),

], verbose=True)),

('classifier', LogisticRegression(max_iter = 5000, random_state=55)),

], verbose=True)

pipeline.fit(X_train, y_train)

y_pred = pipeline.predict(X_test)

experiment_descriptor = 'Logistic Regression classifier using only Model2Vec embeddings (Sanity Check)'

results = save_results('results_example_vader.csv', pipeline, experiment_descriptor, dataset_descriptor, y_test, y_pred, target_classes, target_names, classifier_step_name = 'classifier')[Pipeline] ........ (step 1 of 3) Processing preprocess, total= 1.3s

[FeatureUnion] .... (step 1 of 1) Processing embeddings, total= 2.1s

[Pipeline] .......... (step 2 of 3) Processing features, total= 2.1s

[Pipeline] ........ (step 3 of 3) Processing classifier, total= 3.8sResults for the four experiments are shown below. The best model achieves an F1 score of 0.655. While much better performance is possible for sentiment classification and only a small amount of training data was used, the model with VADER statistics and embeddings outperformed:

- a model trained on embeddings alone

- a model trained on VADER’s statistical output

- the predictions of the VADER algorithm itself.

fields = ['experiment', 'accuracy_f1', 'negative_f1', 'neutral_f1', 'positive_f1']

display(pd.read_csv('results_example_vader.csv').sort_values(by='accuracy_f1', ascending=False)[fields])| experiment | accuracy_f1 | negative_f1 | neutral_f1 | positive_f1 | |

|---|---|---|---|---|---|

| 2 | Logistic Regression classifier using VADER features and Model2Vec embeddings | 0.655000 | 0.714286 | 0.583927 | 0.664671 |

| 3 | Logistic Regression classifier using only Model2Vec embeddings (Sanity Check) | 0.634333 | 0.680997 | 0.559836 | 0.659023 |

| 1 | Logistic Regression classifier using VADER features | 0.585333 | 0.645557 | 0.509561 | 0.598956 |

| 0 | Assigning labels using VADER | 0.561667 | 0.622105 | 0.451873 | 0.593658 |

Experimental: Textplumber functionality to aide interpretation of VADER scores

Note: this functionality will be released in Textplumber 0.0.10. To use it before this version is released, install Textplumber from the Github repository.

Textplumber includes functionality to aide interpretation of VADER’s scoring of individual texts, and functionality to visualize the salience of VADER lexicon words across multiple texts. This functionality is currently added in a form that may change in the future. There may be breaking changes related to this functionality in future releases, but it available for use now.

The functionality in this section was developed for the following paper:

Moses, J., Ford, G. See Spot save lives: fear, humanitarianism, and war in the development of robot quadrupeds. Digital War 2, 64–76 (2021). https://doi.org/10.1057/s42984-021-00037-y

SentimentIntensityInterpreter

SentimentIntensityInterpreter (lexicon_file:str='vader_lexicon.txt', emoji_lexicon:str='emoji_utf8_lexicon.txt' )

A class to aide interpretation of VADER scores.

| Type | Default | Details | |

|---|---|---|---|

| lexicon_file | str | vader_lexicon.txt | path to a custom lexicon file |

| emoji_lexicon | str | emoji_utf8_lexicon.txt | dictionary of emoji lexicon, if not provided, the default VADER emoji lexicon will be used |

SentimentIntensityInterpreter.polarity_scores

SentimentIntensityInterpreter.polarity_scores (text:str)

A method based on the VADER polarity_scores method that collates the lexicon words influencing the scoring of a text for improved interpretability.

SentimentIntensityInterpreter.explain

SentimentIntensityInterpreter.explain (text:str)

Prints a visual explanation of the text with each word’s colour gradients from red to green based on the word score, with indicators for compound score and proportions of positive, negative and neutral.

Functionality to aide interpreting VADER scores

Textplumber adds a class that duplicates all VADER functionality and adds some additional functionality to improve interpretability.

The SentimentIntensityInterpreter.polarity_score method is based on VADER’s polarity_score method. The functionality added collates the lexicon words influencing overall sentiment scores and the scores VADER assigns to them. The changes to the polarity_score method from the original polarity_score method are minor and do not affect how VADER scores a text. You can check the source code for the SentimentIntensityInterpreter class to see the annotated changes.

Note: The VADER code was authored by C.J. Hutto and was published under a MIT license. If you use Textplumber’s VADER functionality, please cite the original VADER paper and Texplumber.

Explain VADER scores for a text

The SentimentIntensityInterpreter.explain method outputs the text with a visual representation of the the words that influenced the sentiment score, as well as visual indicators of the overall VADER compound score and the proportions of positive, negative, and neutral words in the text. You can hover over words or the proportion bars for the specific scores. Words that are negated are shown with a ⛔ symbol. Words that are boosted or dampened by modifiers are shown with ⬆️ or ⬇️. VADER uses the textual version of emojis to assign sentiment scores. This text is added in after the emoji in italics to show the text used for the sentiment score.

vader_interpreter = SentimentIntensityInterpreter()

for text in test_strings[:4]:

vader_interpreter.explain(text)Visualize the salience of VADER lexicon words across multiple texts

sentiment_wordcloud

sentiment_wordcloud (texts:list[str], plot_mode:str|None=None, max_words:int=200, neutral_threshold:float=0.05, font_path:str=None)

Generates a word cloud indicating the salience of VADER lexicon words across a list of texts. Note: this is new functionality and is likely to change in future releases.

| Type | Default | Details | |

|---|---|---|---|

| texts | list | ||

| plot_mode | str | None | None | select how the plot is generated, must be one of ‘class_valence’ or None (default), ‘class’, or ‘valence’ (there are additional notes below) |

| max_words | int | 200 | |

| neutral_threshold | float | 0.05 | threshold for neutral sentiment scores, default is 0.05 |

| font_path | str | None | path to a font file for the word cloud |

| Returns | None |

The sentiment_wordcloud function is intended to aide identification of the words in VADER’s lexicon that are influencing sentiment across multiple texts. The plot produces two word clouds, one positive and one negative. The size of each word indicates its frequency and the shade of each word indicates the average intensity of the word’s valence when used to score the texts. A word close to black indicates very positive or very negative valence. The lighter the shade of gray used, the lower the average intensity when that word was used.

There are three modes for the word cloud:

class_valence: This mode bases the plot on agreement between VADER’s predicted class of the text (‘positive’ or ‘negative’) and the valence of the word. In other words, it will show you the words that are pushing VADER to classify the text as positive or negative.class: This mode bases the plot on the most common words from VADER’s lexicon for each predicted class regardless of each word’s valence.valence: This mode bases the plot on the valence of each word and ignores the predicted class. This shows you the most common positive and negative words from VADER’s lexicon in the texts.

The example below shows a word cloud based on a movie reviews data-set.

dataset_name = 'polsci/sentiment-polarity-dataset-v2.0'

dataset_dir = ''

dataset = load_dataset(dataset_name, data_dir = dataset_dir, split='train')

# sampling 100 texts per class

df = dataset.to_pandas()

sampled_dfs = [

group.sample(n=100, random_state=42)

for _, group in df.groupby('label')

]

df = pd.concat(sampled_dfs, ignore_index=True)

texts = df['text'].tolist()sentiment_wordcloud(texts, plot_mode = 'class_valence')

Experimental: Extract sentiment profile features (VADER scores across a document)

VaderSentimentProfileExtractor

VaderSentimentProfileExtractor (feature_store:textplumber.store.TextFeat ureStore=None, output:str='profile', profile_first_n:int=3, profile_last_n:int=3, profile_sample_n:int=4, profile_min_sentence_chars:int=10, profile_sections:int=10)

Sci-kit Learn pipeline component to extract document-level sentiment profiles consisting of document-level and sentence-level features with their order in the document represented using VADER. (This class is experimental and there may be breaking changes in the future).

| Type | Default | Details | |

|---|---|---|---|

| feature_store | TextFeatureStore | None | (not implemented currently) |

| output | str | profile | the feature output options are documented below. Valid values: ‘profile’ (default), ‘profileonly’, ‘profilesections’, ‘profileallstats’ |

| profile_first_n | int | 3 | number of sentences at start of doc to profile (ignored for ‘profilesections’) |

| profile_last_n | int | 3 | number of sentences at end of doc to profile (ignored for ‘profilesections’) |

| profile_sample_n | int | 4 | number of sentences to sample from doc sentences after first and last removed (ignored for ‘profilesections’) |

| profile_min_sentence_chars | int | 10 | minimum number of characters in body sentences for a sentence to be considered for the profile (ignored for ‘profilesections’) |

| profile_sections | int | 10 | number of sections to split the document into for profiling (for ‘profilesections’ only) |

VaderSentimentProfileExtractor extracts features from sentences or sections within longer documents. This is experimental functionality. There a number of different options for extracting features from a document:

profile(default): Extracts a document sentiment profile vector of VADER’s compound polarity score for the document, the proportion of positive, negative, and neutral across the whole document, and compound scores of sentences in the introduction, body and conclusion of the document.profileonly: Extracts a document sentiment profile vector based on the compound score of sentences in the introduction, body and conclusion of the document.profileallstats: Extracts a document sentiment vector of VADER’s compound polarity score for the document, the proportion of positive, negative, and neutral across the whole document, and compound scores and pos/neg/neu proportions of sentences in the introduction, body and conclusion of the document.profilesections: Extracts a document sentiment vector by pooling sentences from the document into sections. Mean scores are calculated for each section, withprofile_sectionsdetermining the number of sections per document.

VaderSentimentProfileExtractor.fit

VaderSentimentProfileExtractor.fit (X, y=None)

Fit is implemented, but does nothing.

VaderSentimentProfileExtractor.section_profile

VaderSentimentProfileExtractor.section_profile (text)

Mean pooling of VADER scores across document sections .

VaderSentimentProfileExtractor.profile

VaderSentimentProfileExtractor.profile (text:str, doc_level_scores:dict)

Create a document profile with VADER scores, which makes use of document level scores and sentence-level scores across the document.

| Type | Details | |

|---|---|---|

| text | str | the document text |

| doc_level_scores | dict | VADER scores for document text |

| Returns | list | a document profile vector consisting of the document level scores and sentence-level scores across the document |

VaderSentimentProfileExtractor.transform

VaderSentimentProfileExtractor.transform (X)

Extracts the sentiment from the text using VADER.

VaderSentimentProfileExtractor.get_feature_names_out

VaderSentimentProfileExtractor.get_feature_names_out (input_features=Non e)

Get the feature names out from the model.

VaderSentimentProfileExtractor.plot_sentiment_structure

VaderSentimentProfileExtractor.plot_sentiment_structure (X:list[str], y:list, target_c lasses:list=None , target_names:l ist=None, n_clus ters:int=5, samp les_per_cluster: int=5, renderer: str='svg')

Plot the sentiment structure of documents. Requires the VaderSentimentProfileExtractor to be instantiated with output=‘profilesections’ or ‘profileonly’. For each class, cluster the documents by sentiment structure into n_clusters, and plot up to samples_per_cluster. (Experimental feature, will change in future).

| Type | Default | Details | |

|---|---|---|---|

| X | list | ||

| y | list | ||

| target_classes | list | None | |

| target_names | list | None | |

| n_clusters | int | 5 | Number of clusters per class |

| samples_per_cluster | int | 5 | number of samples to plot per cluster |

| renderer | str | svg | ‘svg’ or ‘png’ |

Loading a dataset of longer texts to demonstrate …

dataset_name = 'polsci/sentiment-polarity-dataset-v2.0'

dataset_dir = ''

dataset = load_dataset(dataset_name, data_dir = dataset_dir, split='train')label_column = 'label'

target_names = get_label_names(dataset, label_column)

target_classes = list(range(len(target_names)))

df = dataset.to_pandas()

X = df['text']

y = df[label_column]dataset_descriptor = 'Movie Reviews dataset (Sentiment Polarity Dataset v2.0), train/test randomly sampled (80/20) from original train split'

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42, stratify=y)VaderSentimentProfileExtractor provides a way to visualise the sentiment structure of longer documents. This requires the output to be set to profilesections (for meanpooled scoring across the document) or profileonly (for scoring of sentences in the introduction, body and conclusion of the document).

VaderSentimentProfileExtractor(output = 'profileonly').plot_sentiment_structure(X, y, target_classes = target_classes, target_names = target_names)Below the VaderSentimentProfileExtractor is compared with VADER scoring of the whole text, using VaderSentimentEstimator, and a classifier that uses VADER statistics as features, using VaderSentimentExtractor.

pipelines = {

'Standard VADER algorithm': Pipeline([

('classifier', VaderSentimentEstimator(output = 'labels', neutral_threshold = 0, label_mapping = {'positive': 1, 'negative': 0})),

]),

'LR classifier using VADER document-level scores': Pipeline([

('vader_extractor', VaderSentimentExtractor(output = 'allstats')),

('classifier', LogisticRegression(max_iter = 5000, random_state=55))

]),

'LR classifier using profilesections':

Pipeline([

('vader_extractor', VaderSentimentProfileExtractor(output='profilesections')),

('classifier', LogisticRegression(max_iter = 5000, random_state=55))

]),

'LR classifier using profileonly':

Pipeline([

('vader_extractor', VaderSentimentProfileExtractor(output='profileonly')),

('classifier', LogisticRegression(max_iter = 5000, random_state=55))

]),

'LR classifier using profileallstats': Pipeline([

('vader_extractor', VaderSentimentProfileExtractor(output='profileallstats')),

('classifier', LogisticRegression(max_iter = 5000, random_state=55))

]),

'LR classifier using profile':

Pipeline([

('vader_extractor', VaderSentimentProfileExtractor(output='profile')),

('classifier', LogisticRegression(max_iter = 5000, random_state=55))

]),

}

for experiment_descriptor, pipeline in pipelines.items():

print(f"Running experiment: {experiment_descriptor}")

pipeline.fit(X_train, y_train)

y_pred = pipeline.predict(X_test)

results = save_results('results_example_vader_profiles.csv', pipeline, experiment_descriptor, dataset_descriptor, y_test, y_pred, target_classes, target_names, classifier_step_name = 'classifier')Running experiment: Standard VADER algorithm

Running experiment: LR classifier using VADER document-level scores

Running experiment: LR classifier using profilesections

Running experiment: LR classifier using profileonly

Running experiment: LR classifier using profileallstats

Running experiment: LR classifier using profileThe results of the experiments are summarised below. On the long texts used for this experiment, all profile-based classifiers using VaderSentimentProfileExtractor perform better than the two baselines (Standard VADER scoring, VADER document scores as classifier features). The best results are achieved for the profile default output option, which combined document-level scores with sentence-level scores across the document.

fields = ['experiment', 'accuracy_f1', 'neg_f1', 'pos_f1']

display(pd.read_csv('results_example_vader_profiles.csv').sort_values(by='accuracy_f1', ascending=False)[fields])| experiment | accuracy_f1 | neg_f1 | pos_f1 | |

|---|---|---|---|---|

| 5 | LR classifier using profile | 0.7150 | 0.710660 | 0.719212 |

| 3 | LR classifier using profileonly | 0.6850 | 0.688119 | 0.681818 |

| 2 | LR classifier using profilesections | 0.6775 | 0.692124 | 0.661417 |

| 4 | LR classifier using profileallstats | 0.6750 | 0.673367 | 0.676617 |

| 1 | LR classifier using VADER document-level scores | 0.6575 | 0.600583 | 0.700219 |

| 0 | Standard VADER algorithm | 0.6450 | 0.567073 | 0.699153 |

A plot of most discriminative features for the classifier using VaderSentimentProfileExtractor and the profile feature output option is included below. VADER scores (doc_positive, doc_negative, doc_neutral, and doc_compound) for the whole document are discriminative features. Sentiment scores for sentences from the conclusion are helpful to discriminate overall sentiment of the text relative to sentence scores for other parts of the texts.

plot_logistic_regression_features_from_pipeline(pipeline, target_classes, target_names, top_n=20, classifier_step_name = 'classifier', features_step_name = 'vader_extractor')| Feature | Log Odds (Logit) | Odds Ratio | |

|---|---|---|---|

| 3 | doc_positive | 2.244448 | 9.435208 |

| 1 | doc_negative | -1.303382 | 0.271612 |

| 2 | doc_neutral | -0.905903 | 0.404177 |

| 9 | conclusion_sentence_2 | 0.851913 | 2.344126 |

| 7 | conclusion_sentence_0 | 0.505986 | 1.658621 |

| 8 | conclusion_sentence_1 | 0.410974 | 1.508286 |

| 0 | doc_compound | 0.403546 | 1.497125 |

| 12 | body_sentence_sample_2 | 0.277697 | 1.320086 |

| 6 | introduction_sentence_2 | 0.262118 | 1.299679 |

| 10 | body_sentence_sample_0 | 0.202882 | 1.224928 |

| 5 | introduction_sentence_1 | 0.189453 | 1.208589 |

| 13 | body_sentence_sample_3 | 0.158868 | 1.172184 |

| 4 | introduction_sentence_0 | 0.054385 | 1.055891 |

| 11 | body_sentence_sample_1 | 0.033233 | 1.033791 |

Experimental: Extract sentiment-tagged ngrams as features

VaderSentimentPOSNgramsExtractor

VaderSentimentPOSNgramsExtractor (feature_store:textplumber.store.TextFe atureStore=None, output:str='sentimentposngrams', ngram_range:tuple=(2, 2))

Sci-kit Learn pipeline component to extract ngrams based on POS tags and sentiment from VADER lexicon. (This class is experimental and there may be breaking changes in the future, including the possibility of complete removal).

| Type | Default | Details | |

|---|---|---|---|

| feature_store | TextFeatureStore | None | (not implemented currently) |

| output | str | sentimentposngrams | sentimentposngrams or sentintensityposngrams, this is experimental and likely to change |

| ngram_range | tuple | (2, 2) | ngram range for POS ngrams |

VaderSentimentPOSNgramsExtractor.convert_score_to_token_label

VaderSentimentPOSNgramsExtractor.convert_score_to_token_label (score:flo at)

Convert VADER score to a token label (experimental).

VaderSentimentPOSNgramsExtractor.get_sentiment_pos_ngrams

VaderSentimentPOSNgramsExtractor.get_sentiment_pos_ngrams (text)

Get ngrams of POS features and lexicon+pos features (experimental).

VaderSentimentPOSNgramsExtractor.fit

VaderSentimentPOSNgramsExtractor.fit (X, y=None)

Fit derives all ngrams.

VaderSentimentPOSNgramsExtractor.transform

VaderSentimentPOSNgramsExtractor.transform (X)

Transform into sentiment ngrams.

VaderSentimentPOSNgramsExtractor.get_feature_names_out

VaderSentimentPOSNgramsExtractor.get_feature_names_out (input_features=N one)

Get the feature names out from the model.